Project description

Advances in ultrasound transducer technology are reducing sensor size, cost and power consumption while increasing bandwidth. As a result, ultrasound-based designs are becoming a viable alternative in a range of new application areas.

Promoting research into ultrasound technology and exploring its use cases is one of the main aims of the European Horizon 2020 project “Silense”. In this context, applications for the automotive market, indoor navigation and smart home environments, to name a few, are being investigated. As part of the Silense consortium, our research focuses on the realization of a wearable gesture recognition system.

Wearable gesture recognition systems have manifold applications, and some ultrasound-based realizations are already on the market, for example as human-machine interface control gadgets in the gaming industry. This research takes the existing capabilities one step further, mainly in terms of measurement accuracy and speed and the number of transducer nodes deployed to increase the coverage area.

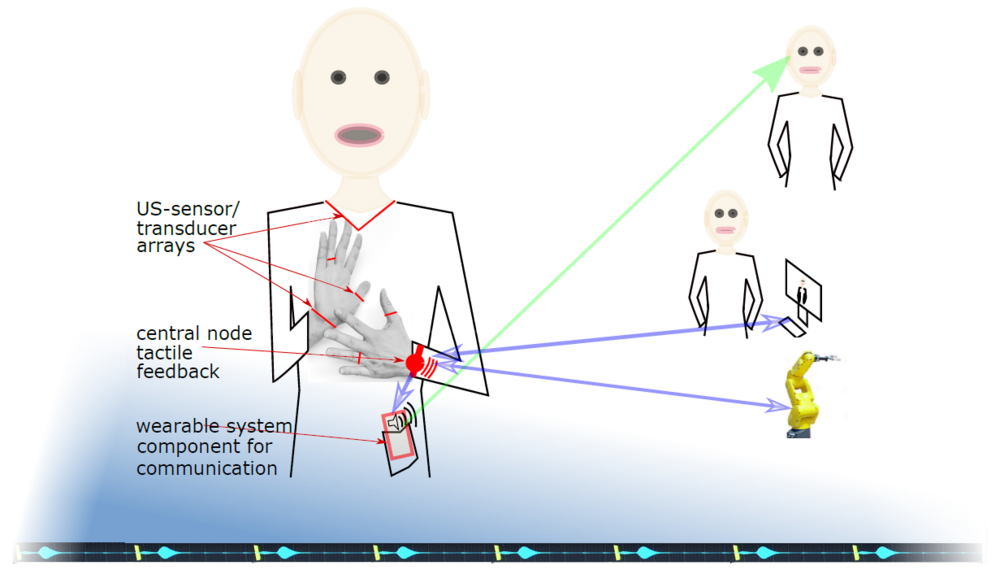

This will open up new possibilities in a variety of use cases. In this project we target three application areas: sign language interpretation, control of industrial robots, and physiotherapeutic training aids that help patients accurately reproduce training exercises as instructed by the therapist.

Project Facts

ISP Research Team

Eugen Pfann

Daniel Lagler

Funding

EU (ECSEL project)

FFG

Partners

Infineon Villach

Infineon Munich

LCM Linz

Duration

May 2017 - Apr. 2020

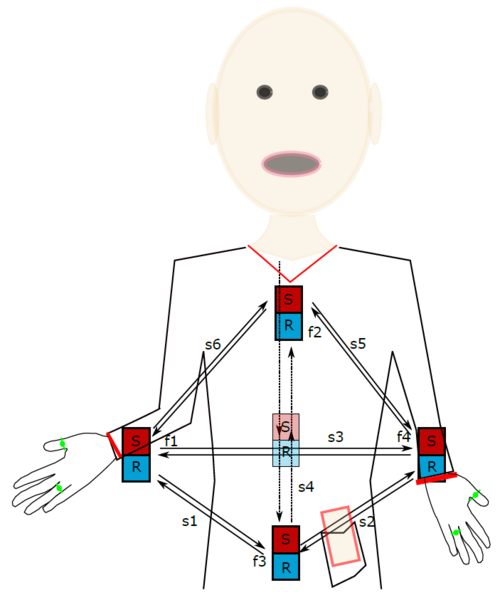

The figure above depicts an ultrasound gesture recognition system. It consists of a number of small nodes attached to wearable items such as rings, a necklace, a belt buckle or wrist bands. These nodes measure their relative location and motion and pass the acquired information to a central unit.

The main focus of this project is to devise signal processing algorithms that provide precise location and motion measurements and extract the required information from the raw data. This includes array signal processing, sensor signal fusion and motion tracking. Appropriate features are extracted from the recorded data and serve as input to machine learning algorithms that identify the executed gestures.
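
To make the idea of a location measurement between two nodes more concrete, the following is a minimal sketch of one common ranging technique: estimating the time of flight of an ultrasound pulse by cross-correlation and converting it to a distance. The project description does not state which method is actually used, so everything here is an assumption for illustration: the function names, the 40 kHz tone burst, the 200 kHz sampling rate, the noise-free simulated signal, and the premise that emitter and receiver share a synchronized clock.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed)


def make_pulse(fs, f0, n_cycles):
    """Hann-windowed tone burst used as a hypothetical transmit pulse."""
    n = int(round(fs * n_cycles / f0))
    return [
        0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))
        * math.sin(2.0 * math.pi * f0 * i / fs)
        for i in range(n)
    ]


def estimate_delay_samples(received, pulse):
    """Return the lag (in samples) that maximizes the cross-correlation."""
    best_lag, best_val = 0, float("-inf")
    for lag in range(len(received) - len(pulse) + 1):
        val = sum(p * received[lag + i] for i, p in enumerate(pulse))
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag


def estimate_distance(received, pulse, fs):
    """One-way distance in metres from the estimated time of flight."""
    return estimate_delay_samples(received, pulse) / fs * SPEED_OF_SOUND


# Simulated, noise-free reception: the pulse arrives attenuated after a
# known delay, assuming the receiver starts recording at emission time.
fs = 200_000.0      # sampling rate in Hz (assumed)
f0 = 40_000.0       # carrier frequency in Hz (assumed)
pulse = make_pulse(fs, f0, n_cycles=8)
true_delay = 175    # samples
received = [0.0] * 400
for i, p in enumerate(pulse):
    received[true_delay + i] += 0.6 * p

distance = estimate_distance(received, pulse, fs)
print(f"estimated distance: {distance:.4f} m")
```

In a real system the received signal would contain noise, multipath echoes and clock offsets, which is where the array processing, sensor fusion and tracking steps mentioned above would come in.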